Dynamics of Corporate Governance Beyond Ownership in AI

Dynamics of Corporate Governance Beyond Ownership in AI

Executive Summary

As hype around Artificial Intelligence (AI) reaches fever pitch, Big Tech has only cemented its control over the development and use of these new technologies. This is not simply exercised through ownership — acquiring AI start-ups, for example — but increasingly involves complex alternative ways of exerting control.

As this report shows, the mechanisms at play include:

- Using corporate venture capital to get preferential access to capabilities, knowledge and information and steer the direction of start-ups’ R&D.

- Entrenching a dominant position through providing cloud services, leaving other organisations no alternative outside paying Big Tech. This includes offering cloud credits which direct start-ups and academics to “spend” this investment in particular ways such as building applications on top of AI models owned by Big Tech.

- Capturing the majority of AI talent, either directly or indirectly by paying academics to work part-time for Big Tech even as they retain an academic affiliation.

- Influencing the direction of research by setting the priorities at conferences and shaping perceptions of what is seen as the frontier of AI.

While open source is sometimes positioned as a source of countervailing power for Big Tech, this has also been co-opted — Big Tech companies open source certain pieces of their software or models, as when Meta made its Large Language Model (LLM), Llama, open source. This allows them to both benefit from a community of developers willing to work for free on the code and make possible improvements, but also makes it easier for developers to build applications on their own models and software, cementing their dominant position.

What is needed is genuine alternatives to for-profit AI, ones oriented towards people and planet. This needs to be underpinned by opening up datasets — including Big Tech datasets — where this will support collective good, as well as a public research institution to facilitate large-scale collaboration.

[.green]1[.green] Introduction: The OpenAI Saga

On 17 November 2023, OpenAI’s CEO and co-founder Sam Altman was fired. The scandal took a sudden turn as Microsoft offered shelter to Altman and those wanting to leave the company with him. This saga, which ended with Altman reinstated as CEO on 22 November, made explicit something that the tech industry already knew: OpenAI has been a Microsoft satellite since 2019 when Microsoft invested its first $1 billion in the AI start-up.

Back in 2019, OpenAI was already an AI forerunner alongside DeepMind. The latter had been acquired by Google in 2014. In exchange for its investment, Microsoft got privileged access to OpenAI’s large language models (LLMs) and a chance to steer what they were working on. This came with a change in OpenAI’s legal structure from a non-profit to a “capped-profit” company, meaning a for profit company with a limit on returns from investments. The changes led some OpenAI researchers to quit and form Anthropic, a start-up now backed by Google.

OpenAI released ChatGPT after an additional injection of $2 billion and a push from Microsoft to halt the development of more advanced LLMs to work on an application of its existing model. Microsoft had access to ChatGPT technology months ahead of its release, embedding it into its software ahead of rivals.

ChatGPT’s massive success led Microsoft to commit an additional $10 billion investment in OpenAI. Today, Microsoft owns 49 per cent of OpenAI. The larger the latter’s business, the better for Microsoft. It can even sell ChatGPT and other software as an OpenAI service to rival companies that would be more reluctant to purchase them directly from Microsoft, like Salesforce.

Investing in without acquiring OpenAI proved to be a more favourable decision for Microsoft than Google’s acquisition of DeepMind. It allowed Microsoft to expand its AI services’ sales base and, at least until the attempt to fire Altman, it diverted attention from regulators, keeping public concern at bay. Microsoft’s CEO Satya Nadella even claimed publicly that the new wave of AI was not privileging incumbents like Microsoft but entrants like OpenAI, without mentioning that the latter operates as its satellite.

Why is this story relevant beyond corporate intricacies? What does it tell us about corporate governance in the digital age? The first lesson is that control and ownership do not go hand in hand, as explained in further detail in Section Two. The second lesson is that, while mergers and acquisitions still matter, corporate venture capital is not merely a strategy to make financial gains. This is explained in Section Three with a detailed analysis of selected tech companies’ venture capital investments and a zoom into who is funding AI start-ups. It may still be argued that corporate venture capital is a way to control with partial — even if not full — ownership. Section Four digs into the so-called public cloud as an arena of control beyond ownership for Amazon, Microsoft and Google. Section Five analyses the role of Big Tech in the planning of frontier AI. Section Six examines whether open sourcing AI could be a solution to Big Tech dominance. This report ends in Section Seven offering some guidelines for a development and use of AI for the public and nature good, against an AI that reinforces polarisation, inequalities and corporate control.

[.green]2[.green] Control Beyond Ownership

Discussions about control and ownership in corporate governance typically focus on the relationship between management and owners. However, in the tech sector it is increasingly common to list tech companies with dual class voting shares. This means that the company has at least two types of stocks. Their only difference is that one class gives more voting power per share, and is reserved for founders, top executives and sometimes, early investors.

Google’s parent company Alphabet is a prominent example. Its Class A shares give one vote per stock, its Class B shares give ten votes per share and its Class C shares give no voting power at all. By 31 December 2023, Google founders owned 86.5 per cent of their company’s Class B shares, which represented 51.5 per cent of total voting rights, despite Class B shares representing only 6.99 per cent of total Alphabet shares.[1]

Meta, Palantir, Pinterest, Snap, Square and Zoom all also use a two-tier system for their stocks, and going public with a dual class stock is becoming a more frequent strategy among tech companies. Jay Ritter’s data on IPOs since 1980 show that dual class listings in the tech sector remained below 15 per cent until 2005, growing to more than 30 per cent of all the tech companies listed in 2015. In the 2020s, this figure has always remained above 40 per cent.

Dual class voting shares split shareholders between a majority that owns most of the company but has relatively much less — or even no — capacity to control it, and a ruling minority. But beyond the relationship between shareholders, founders and executives, control can also be exerted at the organisational level, as some corporations control production and extract profits from assets that are legally owned by other organisations.

This form of control is rooted in the distinction between property and accumulation that is already implicit in the legal form of the corporation. Since the end of the nineteenth century, corporations can — fully or partially — own other corporations. Due to limited liability, the parent company is not responsible for the liabilities of its subsidiaries, or the subsidiaries of its subsidiaries and so on. Multinational corporations were created as bundles of — even hundreds of — separate entities registered in different jurisdictions. Corporations as legal entities were always different from the economic concept of a single multinational enterprise. Legally speaking, the multinational corporation as a single entity never existed, despite headquarters exerting unified control over all the subsidiaries.[2]

While the parent company is the ultimate owner of at least part of the other entities’ shares, other forms of controlling different organisations or legal entities beyond ownership have emerged since the 1970s, underpinned by the concentration of intangible assets. Franchising is a standard practice of control beyond ownership that ballooned in the hospitality industry. The franchisor owns the brands, often protected with trademarks, as well as the business practices, patented machinery and registered designs. All this is imposed to franchises at prices decided by the franchisor, who also takes a share of the gross revenue of every franchisee.[3]

Global value chains operate along similar lines even if the facade of every enterprise does not look alike. They are governed by leading corporations that concentrate the exclusive knowledge on how to reintegrate the chain, (i.e. who can do what and how) and capture value in the form of intellectual rents paid by those integrating the chain in subordinated positions.[4] The relation between platform companies and complementors — such as app developers and providers of cloud services — replicates the same pattern. The platform not only captures value but also defines how apps or services must be produced and priced based on its control of crucial intangibles (see Section Four).

In all these architectures, the subordinated companies accumulate capital partly for the leading corporation that secured privileged access to intangible assets required for the overall production process. These leaders manage and curate the flows of information and knowledge between the parties. As a standard practice, they also outsource steps of the production of new knowledge to other institutions (universities, start-ups, etc.) while retaining control over how to recombine and make sense of all these pieces. Overall, knowledge is produced by many, but a few disproportionately harvest associated profits captured from other organisations and consumers in the form of intellectual rents.

Intellectual rents are an extraordinary profit that are supposed to last until those in the same industry adopt the innovation that triggered them or another (new) firm innovates and takes the lead. However, intellectual rentiership is reinforced by secrecy (as in the case of big data and secretly kept algorithms) and intellectual property rights (IPRs). We speak of intellectual monopolisation to describe the perpetuation of a practice in which many co-create value and co-produce knowledge that ends up disproportionately captured by a few giants.[5] Intellectual monopolies’ economic concentration relies on their concentration of those intangible assets. In simple terms, these are companies that accumulate to a significant degree by turning knowledge and data co-produced with (or by) many others into their own intangible assets. This is precisely what is happening with AI.

[.green]3[.green] Corporate Venture Capital

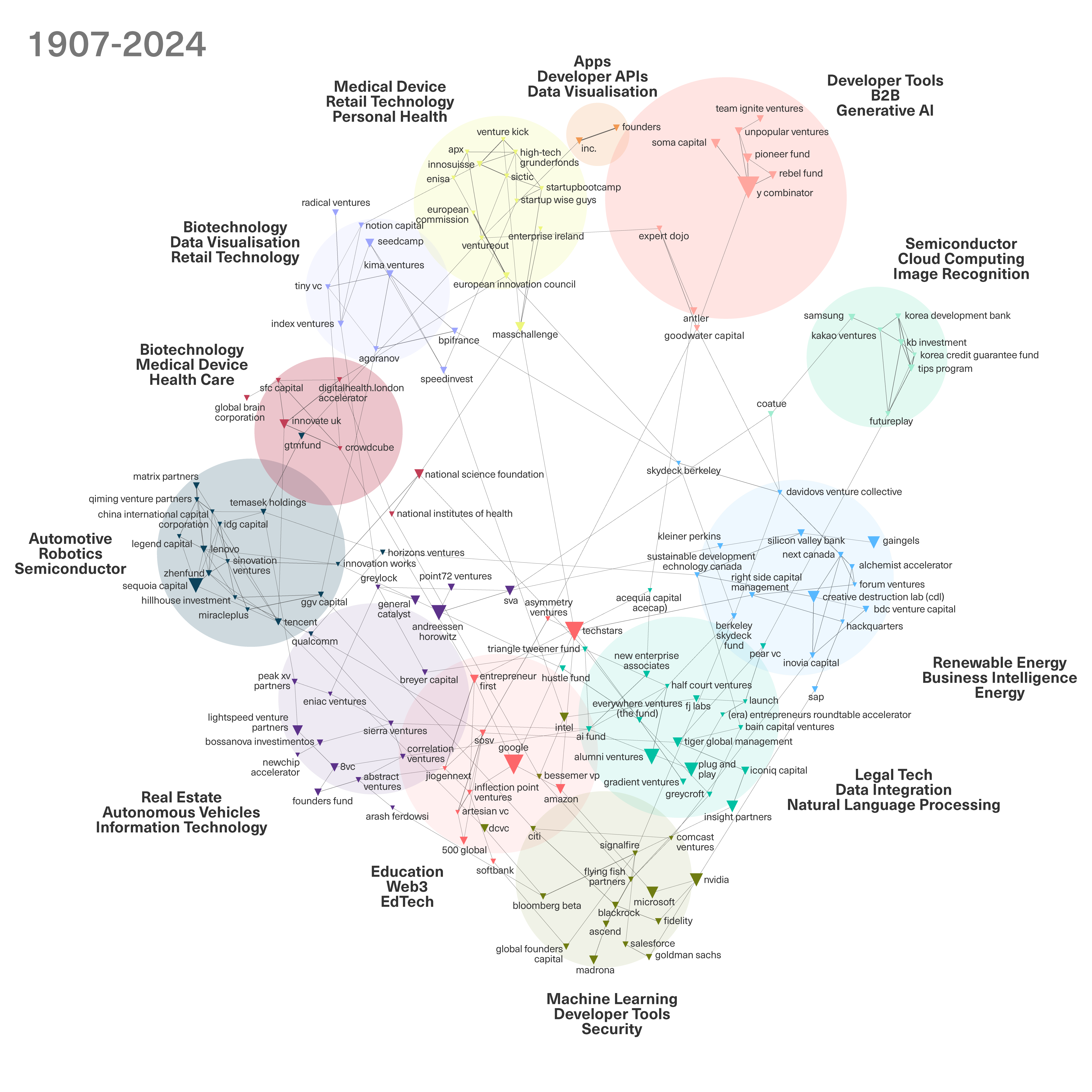

Google, Microsoft and Amazon are actively investing in hundreds of start-ups, prioritising AI. In 2023, they poured more money into the AI start-up world than any venture capitalist. Together, they invested two-thirds of the $27 billion raised. These companies invest across a wide range of AI applications: large tech companies at least partly control start-ups. The place of these giants in the whole venture capital system in terms of the number of funded companies is apparent in the network of funders appearing more frequently among the top five investors of every AI company that received venture capital investments since ChaptGPT was released (see Figure 1, more information on corporate venture capital and a methodological note presented at the end of the report). Figure 1 also provides information of the technologies that are more frequently developed by the companies funded by each cluster of investors.

Figure 1 does not provide information on amounts invested but does show the widespread influence of large tech companies. These companies are not concentrating investments in only a few start-ups; instead, Figure 1 shows that, except for the clusters on AI applications for healthcare, at least one tech giant is present in every cluster, indicating that they are controlling most of the AI start-ups’ universe.

Judging by the focus of the companies funded in each cluster, large tech companies are more interested in controlling the companies developing foundational or more generic AI and those working on applications for industries other than healthcare — renewable energies, smart cities and buildings, robotics and semiconductors. Samsung privileges investments in companies working on AI and semiconductors, SAP favours companies applying AI to renewable energies and the Chinese giant Tencent invests more prominently on firms developing automation and robotics. Microsoft, just like Intel and Nvidia, remains focused on funding more general-purpose machine learning. Meanwhile, Google and Amazon prefer AI companies working on applications for education and blockchain. For more information on the network of corporate venture capital, see the figure and methodological note at the end of this report.

In the whole sample analysed of AI companies receiving venture capital since ChatGPT was released, Google ranks third in terms of the number of companies it funds (see Table 1). Only the pre-seed investor Techstars and the accelerator Y Combinator are ahead of Google, with Y Combinator primarily investing in AI start-ups outside large tech companies’ areas focus (see its place in Figure 1) while Techstars mainly funds companies working on applied AI rather than general or foundational models.

[.fig]Table 1: Top 25 AI Start-Up Investors[.fig]

[.notes]Source: Author’s analysis based on Crunchbase. Data retrieved by February 2024 for all the companies that received funding since the release of ChatGPT.[.notes]

Microsoft ranks tenth in Table 1. Judging by the reasons stated when it recently confirmed its investment in the French AI start-up Mistral, which referred to diversifying investments in AI start-ups as a way to compensate for its large stake in OpenAI, it is highly likely to see Microsoft climbing positions in this ranking in the short run. Mistral, far from being an independent player before agreeing with Microsoft, already had Nvidia and Salesforce among its top funders. Between Google and Microsoft, major venture capitalists, including Sequoia Capital, fill out most of the rest of the list of top funders. The sole example of public funding for AI companies in the top ten is the UK’s national innovation agency, Innovate UK.

In short, acquisitions are not the only way for large tech companies to access start-ups’ intangibles and talent while keeping potential rivals at bay. A more hidden yet prevalent form of domination involves the use of corporate venture capital to get preferential access to capabilities, knowledge and information and a chance to steer start-ups’ research and development. Just like acquisitions, this is a useful mechanism to get potential future returns from those investments while preventing start-ups from becoming rival. To some degree, this resonates with the view of Andrew Ng, managing general partner of the venture capital firm AI Fund and former founder of Google Brain and Chief AI scientist at Baidu. He explains in many talks that his investments are mostly concentrated in the application layer that basically depends on large and established tech companies and grows within the latter clouds (see Section Four). In fact, a general takeaway from the analysis of the AI start-ups is that they are mostly applying AI rather than developing foundation models that would compete with the large tech companies.

Joining as investors also allows large tech companies a direct line to a start-up’s founder(s) or CEO. This is useful if they plan to become high value clients or suppliers, using fundings to quickly integrate the start-up into their sphere of control. For Nvidia and the three cloud giants, they can double dip by getting the money back when the company spends on infrastructure, which means purchasing Nvidia GPUs or directly renting GPUs while using other services from Big Tech clouds.

[.green]4[.green] Controlling from the Clouds

Microsoft’s investments in OpenAI were not simply in cash. Part of the money was a cloud credit for processing power. Since 2019, Microsoft’s cloud Azure trains and runs OpenAI’s models. Phil Waymouth, Microsoft’s senior director of strategic partnerships, stated after the success of ChatGPT that this breakthrough had been achieved thanks to the “shift from large-scale research happening in labs to the industrialisation of AI”. AI is not only made of code or algorithms; it is an outcome of combining data, code and compute. There is no AI if one of these three parts is missing, and most of the ownership of the compute power is concentrated by three US Big Tech clouds.

In a misuse of the term public, the so-called public cloud is a highly concentrated and profitable private business. Amazon’s AWS, Microsoft and Google, in that order, control 65 per cent of the cloud computing market globally. AWS leads with a stable share of 32 to 34 per cent, while Microsoft and Google have been growing faster than Amazon’s cloud recently, at the expense of other, smaller players. Between 2017 and 2023, Microsoft’s market share grew from between 12 and 13 per cent to 22 per cent of the world market. Google also almost doubled its market share, taking 11 per cent of the market by 2023. Accordingly, Google Cloud revenues doubled between 2020 and 2022. While AWS is Amazon’s most profitable business, Google still declares a deficit for its cloud, partly due to the company’s efforts to maintain low prices in order to capture market share. The only major cloud providers outside the US are in China where Alibaba is the fourth largest provider globally.

The public cloud is a global outsourcing architecture where organisations pay for accessing a computing service. Broadly speaking, four types of services are offered on the cloud: software as a service (SaaS), platforms as a service (PaaS), infrastructure as a service (IaaS) and data as a service (DaaS). Organisations of all sizes in multiple sectors, from start-ups to large corporations, are increasingly moving their computing operations to the cloud; by 2025, 45 per cent of the world’s data storage will be on the cloud.

The driver for firms to migrate to the cloud is that it turns what could be a significant capital expenditure into a variable and extremely flexible cost. IaaS means that companies and other organisations pay for what they consume in terms of storage and processing power, which varies with the macroeconomic and specific business and industry cycles. While these services deconcentrate tangible capital for most, they result in a higher concentration of tangible assets for Amazon, Microsoft and Google. According to Bloomberg data for 2021, the cloud capital expenditure of these companies was between 9 and 12 per cent of their cloud revenues. These giant cloud providers have enclosed digital spaces and capture an infrastructure rent from leasing their use. This can be compared to the rents charged by urban and rural landowners.[6]

IaaS is already a very lucrative business, and it is developed together with the other cloud services. The additional cost of selling SaaS to another customer — its marginal cost — is close to zero because the same lines of code are sold again and again. The price basically reflects an intellectual rent garnered by keeping code secret.

Cloud hegemons offer growing sets of AI services in their clouds that assist the development of AI field-specific applications. These services make AI adoption faster and easier but also riskier. When the SaaS products are machine learning algorithms (including the frontier generative AI that powers intelligent chatbots), the more they are lent, the more they will process data, thus learning and self-improving and reinforcing cloud giants’ digital leadership. This is because machine learning today became a synonym of deep learning, an AI approach in which the algorithm gets better — makes better predictions — the more data it processes.[7]

For organisations which cannot or choose not to train their own models, cloud giants also offer pre-configured virtual machine templates to train the AI algorithms. Microsoft used OpenAI’s compute needs to advance Azure’s training environments, developing a standardised solution now offered to other customers as a service for training and using chatbots and other custom AI solutions.

Public clouds also offer DaaS and data preparation tools that put data in a format suitable for processing by algorithms. DaaS refers to access to standardised databases, such as image or text datasets. AWS hosts a marketplace for data called Data Exchange, which by November 2023 had over 3,500 datasets on offer from more than three hundred data providers. As with code, the same dataset can be sold again and again without much additional labour.

The principle of SaaS, DaaS and PaaS is always the same: selling each service as many times as possible with a price that mainly reflects a rent. A further advantage for cloud giants is that, with the exception of open-source solutions offered as a cloud service, all these services are black boxes. Companies using these services cannot learn by using these digital technologies because they pay only for use, not for access to the intangibles on the cloud.

So, why do start-ups, and other companies, migrate to or directly develop their solutions on these clouds? For start-ups, the cloud not only means flexibility in the face of economic uncertainties. They also do not have the disposable funds to invest in a datacentre and employees to maintain and code the more general pieces needed to run their specific solutions. In other cases, they face difficulties in finding the people that can develop frontier AI (see Section Five).

The proliferation of start-ups around the world relies on having different elements of computing available as a service precisely because it reduces entry barriers. The downside is that all these start-ups are born as endless rent payers. Their whole business strictly depends on Big Tech and, unlike larger players that can and are exploring alternatives to counterbalance these giant corporations’ dominance,[8] there is no way out for start-ups.

This problem is even more acute where start-ups sell their offerings as cloud services, which incurs a further fee. On AWS Data Exchange, for example, companies are charged a fee every time their services are purchased. By January 2023, AWS marketplace had 6,783 registered partners associated with 178 qualifications (specific classes of cloud services within SaaS, PaaS and so on).[9] Those offering in these marketplaces are called partners by the cloud giants, but the relationship is everything but a partnership among equals. These partners are mostly small enterprises but there are also large multinationals, like Hitachi and IBM, and major consultancy companies like Accenture and Deloitte, that also offer cloud services on Big Tech platforms.

Barracuda Networks, a cybersecurity software company, was the first to offer SaaS on AWS marketplace. By 2023, it was offering 49 different products on this cloud. At the other end of the spectrum, 2,374 companies offer only one computing service on AWS. Because most of these companies only sell computing services, their turnover is completely dependent on Big Tech.

Amazon — like the other cloud giants — dictates every movement of cloud service providers on AWS even if these are formally independent companies. Amazon defines rules to be followed for setting prices and sets maximum annual price increases. Price changes must be reviewed and approved by Amazon. The process takes between one and three months. AWS also recommends how and when to charge for the use of a service.[10] This includes the provision of standardised licensing contracts to be signed each time a customer purchases a service with contract pricing on its marketplace. A contract pricing consists of upfront pricing to customers to buy the use of a service (a license) for a set amount of time. And, as in Amazon’s e-commerce marketplace, listing a product on AWS marketplace is free but there is a 30 per cent transaction fee.

There is also a strong complementarity between the different types of cloud services that further favours Big Tech. Data stored on the cloud needs to be crunched, which will require compute power — a separate cloud service — and algorithms. While companies may write their specific algorithms for the latter and run them on the cloud, more often than not, they write the code already introducing generic SaaS offered on the cloud. Likewise, if a company wants to use a SaaS, it will need to do it on the cloud, which means also consuming, thus paying for, compute power. When it comes to pricing all these services, IaaS is relatively more expensive, in particular for processing big data, while SaaS and DaaS are cheaper and sometimes even free, as I explain next. This is a marketing and business strategy that is particularly beneficial for Big Tech because they own the infrastructure, thus keep all the returns of the more expensive service, while part of the SaaS and the DaaS are often developed by these third-party players for which Big Tech get only the transaction fee.

Cloud credits: locking-in disguised as venture capital

Cloud giants offer cloud services for free to attract more clients. By 2023, AWS offered ten free machine learning services for between 1 and 12 months. They ranged from services that turn text to speech and vice versa to services for building and deploying machine learning models. AWS still offers Amazon Rekognition, a facial recognition tool that in 2019 was found to be racial and gender biased.

Credits can be interpreted as angel or venture capital money that is targeted to specific uses, directing new firms to work on SaaS and, lately, to build AI applications on top of the models controlled by Big Tech and their satellite companies. These compute vouchers foster migration to the cloud. For a company the size of Amazon, Microsoft or Google, expanding their marketplace by providing credits to start-ups that not only use their cloud but will probably end up offering their services on their clouds is both a source of direct revenues and long-term consolidation, since the larger the marketplace, the more likely that more companies will choose that cloud. AWS also hosts start-up competitions and funds winners with additional cloud credits.

A specific type of cloud credit is offered to academics for processing data with AI or using other computing services. AWS Cloud Credit for Research program, as its name implies, grants free cloud credits to conduct a research project using AWS. In 2018, the last year with public information, Amazon gave 387 of these credits to 216 organisations. These were highly concentrated: 49 credits were granted to the University of California and 32 to Harvard. Just nine organisations received five or more credit grants. Besides universities, other public research organisations have also made use of the cloud: the US National Science Foundation has a multi-cloud strategy for hosting its research but nevertheless favours US Big Tech as only authorised suppliers. The EU also eventually partnered with US Big Tech for the development of a European cloud based on GaiaX, Europe’s federated data infrastructure. Whether the UK’s AI Research Resource is set independent to cloud giants remains to be seen. What is clear is that the £300 million committed by the UK government for this facility is around half of what Google was spending per data centre in 2022.

Additionally, AWS has special calls for institutions from semi-peripheral countries. It signed agreements with Argentina’s National Research Council (CONICET), Pontifica Universidad Católica del Perú and Brazil’s National Council for Scientific and Technological Development (CNPq) for joint calls for research proposals. While local firms in Latin America tend to be (very) late adopters of new technologies, leading academic institutions have teams at the global research frontier of their fields, which are currently being pushed to expand AI.

Cloud credits represent an extremely low additional costs for Big Tech. Most computing services are the same lines of proprietary code that can be resold many times over. In terms of processing power or storage capacity, having small projects consuming a very minor portion of Big Tech’s colossal infrastructure has very low opportunity costs. Furthermore, cloud credits let Amazon, Microsoft and Google identify, and thus purchase or copy, successful projects.

[.green]5[.green] Big Tech Control of AI Research

As mentioned above, AI depends on a combination of data, compute and code or algorithms. All of them are under Big Tech control. While Big Tech have been freely harvesting society’s data from their platforms and other sources (see Section Seven on how they have accessed public healthcare data) and have concentrated most of the compute in their clouds, code is not only produced inside Big Tech R&D laboratories. Coding generally refers to the writing of advanced mathematical algorithms. These models or algorithms are sets of rules or instructions to be followed for problem-solving operations or to perform a computation.

Although their original businesses differed, US Big Tech research and development (R&D) has become more and more focused on advancing AI models. Already by 2017, Amazon was the company offering the greatest number of jobs in AI in the US, followed by Nvidia and Microsoft. Big Tech leads the world ranking of Business Expenditure on R&D (BERD); only the US and China have a public expenditure on R&D above each of the five US Big Tech companies (Amazon, Alphabet (Google), Meta, Microsoft and Apple, in order of greatest BERD) (BERD data are available at European Commission, Joint Research Centre, 2023).[11]

AI scientists and engineers working for Big Tech can be seen as a bridge between AI co-production and appropriation, because Big Tech not only develops AI in-house but also works with universities, public research organisations and other firms in joint research projects. These collaborations result in thousands of co-authored papers but only a dozen co-owned patents, because most of the thousands of patents of Big Tech are exclusively owned (Rikap & Lundvall, 2020, 2022).[12]

Often, AI talent is drained from academia. Gofman and Jin found high and exponentially growing levels of brain drain of AI professors from US and Canadian universities into industry, with Google, Amazon and Microsoft hiring the largest number of AI scholars, followed by Meta, Uber and Nvidia.[13] Meta even kept on hiring people for AI-related positions while conducting massive layoffs.[14]

While young prominent scientists usually accept full-time jobs at Big Tech after their PhDs or postdocs, several senior scholars have gotten a juicier deal: they remain affiliated to their academic institution, keeping the prestige and the students that work with them and bring fresh ideas, and they are offered a millionaire compensation package to also work part-time for a Big Tech firm. Analysis of a sample of all the presentations made at the 14 most prestigious AI conferences between 2012 and 2020 found around 100 universities and public research organisations with scholars that had such double affiliations with Amazon, Microsoft, Google or Meta. They were mostly concentrated in the US, but institutions from Europe, China, Canada, Hong Kong and Israel also have scholars with these deals. Compared to other Big Tech firms, Google and Microsoft have more of these double affiliations.[15]

This allows Big Tech companies to control AI research. Judging by citations, Jurowetzki et al. observed that Microsoft and Google are the most influential organisations in the AI research community.[16] More generally, Klinger et al. found that AI research conducted by the private sector, in particular by tech giants specialising in what they defined as “data-hungry and computationally intensive deep learning methods” is guiding the whole AI community.[17]

Since 2012, Big Tech, especially Google and Microsoft, has increasingly participated in major AI conferences. They lead in the number of presentations, the number of other organisations co-authoring research with them and broadly regarding their place in the resulting global network of co-authorships. A driver for being active in these academic events is to identify and recruit AI scientists. Another motivation is the capacity to orchestrate what organisations from around the world perceive as the frontier in AI, fostering the whole field to work on Big Tech priorities.

Network analysis shows how central Big Tech firms have become to setting the terms of AI research. Looking at the network of organisations most frequently presenting research in AI conferences between 2018 and 2020 (the last period in my sample), Google and Microsoft emerge as playing the most important intermediating role, with the capacity to influence organisations that are not directly collaborating with them but with others that do have direct ties, or others that are linked to others that are co-authoring with Big Tech. Occupying comparatively less prominent positions, Amazon, Meta and several Chinese Big Tech also increase their presence and centrality in the network over time.[18]

US Big Tech companies are also prominently represented in the committees of these conferences. NeurIPS, the main machine learning annual conference, is an extreme case. Every Big Tech has at least one member in its organising committee. Google, who got the largest number of accepted papers in this academic convening in 2022,[19] had nine of the 39 committee members. By joining these committees, tech giants get to define what papers will be accepted or win prizes, which is a sign of power to shape the field. Everywhere you look, Big Tech controls the AI system.

[.green]6[.green] Is Open Source the Solution?

One could think of open source as a possible alternative to the entrenched position of Big Tech in the AI field. Open source is a way to produce knowledge collaboratively and voluntarily, and to benefit communities at large. It arose from the need to subvert knowledge privatisation and assetisation from giants like Microsoft and IBM in the 1980s and 1990s.

Copyleft (as opposed to copyright) is a licencing structure that states that every software using and adapting free software must also be free, which meant that it could not be integrated into paid software.[20] But, at a free software summit, this movement voted for “open source” as their new name, narrowing down the scope of free software. The idea of open source paved the way for different licencing alternatives to copyright that did not go as far as copyleft. The most popular one is Apache, which is more business friendly because, unlike copyleft, it is possible to charge a price for the software using open source.

Since the early days of the free software movement, large tech companies have gone from enemies — with Microsoft, Apple and IBM all at one time against open source — to advocates of open source, with Microsoft itself together with Google and Meta presenting themselves as big advocates. This reflects the fact that they have found ways to control the open-source community and profit from it without owning resulting products. Microsoft acquired GitHub, a leading software development platform, for $7.5 billion in 2018. This marked a turning point in Microsoft’s control over open source, allowing Microsoft to harvest code from all GitHub’s open-source projects and put together a dataset of code to train its co-pilot AI solution.

Simultaneously, Microsoft and other Big Tech firms have released some of their solutions as open source. The advantage to doing so is that open-source code is improved by developers from around the globe, most of whom are not employees. By 2018 on GitHub, Microsoft’s vscode,[21] Facebook’s react-native[22] and Google’s TensorFlow were among the most popular open-source projects in terms of number of users contributing to the code; 59 per cent, 83 per cent and 41 per cent of each project’s contributors did not work for Microsoft, Facebook and Google, respectively. By 2023, Microsoft’s vscode remained the top open-source project by number of contributors on GitHub. The 2023 top eight ranking included two other projects open sourced by Microsoft, one of them focused on deployments on the cloud, and a Google software development kit for building apps called Flutter.[23]

Another benefit of putting projects in open source springs from the specific characteristics of the software community. Developers and engineers are, generally speaking, strong supporters of open sourcing. Large tech companies want the best developers and scientists to work for them, directly as employees or indirectly in the forms described in Section Five. By allowing their employees to contribute to this community, Big Tech increases their satisfaction, and also supports collaborations with academia as well as scientists and engineers from other companies.

Furthermore, Big Tech decide what is put on open source to further enhance their sphere of control. For some Big Tech firms, open sourcing became a crucial part of their business models. It enables them to sell complementary products or, even more profitably, create an ecosystem on top of the open-sourced solution. By controlling necessary complements, Big Tech expands control and profits without additional ownership.

The classic example is Google’s Android. Android is open source, which was crucial for its wide adoption by smartphone manufacturers to the point which it became the operating system used in every smartphone except iPhones. Open sourcing — and the fact that Google negotiated with manufacturers to pre-instal Google Play, Android’s applications’ marketplace — attracted developers to code apps that run on top of Android. App developers take the economic and innovation risks and are then locked in Google Play, paying a fee to Google for every sale made or for every ad sold if the app is free.

In the long-run, successful open sourcing is standard setting in action. As their models become industry standards, Big Tech companies reinforce their leadership using open source to signal consensus in the developers’ community. The goal is always to find ways to profit that are not directly selling the technology put in open source but maximising its usage and then leverage on it for making profits.

Google made its software library for machine learning, TensorFlow, an industry standard by open sourcing it. Its usage expanded widely, becoming almost a standard for coding, favouring developments that complement perfectly with Google products. The same has happened with PyTorch, a machine learning framework open sourced by Meta. This strategy expands the number of developers that produce apps and other complementary products that can run on Big Tech platforms, with their clouds as a prominent example, because their coding frameworks are the mainstream. Standard setting is also dynamic and by introducing new open-source solutions that outpace their originally published software, Big Tech keeps the lead. In other cases, Big Tech puts in open-source pieces of knowledge that are not the core of their advantages, including modules that potential rivals could be developing too. Open sourcing is a way to neutralise them.

Some of the LLMs that followed ChaptGPT were open sourced. In this case, open-source means sharing the model parameters but not the training data or what led to include or exclude parameters, thus preventing rivals from fast imitation. The most prominent examples are Llama models, Meta’s LLMs. Although open sourcing initially resulted from an accidental leak of the first version of Llama, it quickly became Meta’s business model.

Meta’s CEO Mark Zuckerberg has explained the reasoning behind the decision to keep Llama in open source: it means developers work for free on Llama providing early feedback. Also, Meta’s business model is not to sell the use of its LLMs, unlike OpenAI, so it is not risking its business when open sourcing the code. In fact, by putting Llama in open source, Meta favours its adoption at the expense of rivals, particularly ChatGPT. If Meta succeeded in making Llama the industry standard, this would mean that more developers build solutions on top of it. Meta’s innovations would then be easier to integrate, potentially creating an ecosystem around Llama, just like Google did with Android. Finally, Zuckerberg has said that open sourcing Llama keeps employees happy and further attracts talent to Meta, at a time when Meta’s reputation and workers’ satisfaction were compromised during the massive layoffs of 2023.

What Zuckerberg did not say is that Meta depends on other Big Tech firms to make Llama work. Lacking its own public cloud, Meta’s LLMs run on AWS, Google Cloud and Microsoft Azure. Although the models are in open source, accessing them has an indirect fee in terms of renting compute processing power. And there is a hidden clause that states that companies with more than 700 million active users must get a separate license from Meta. This is aimed at preventing other companies from developing a too big business with these LLMs without informing Meta and paying Meta an additional fee.

Both in the case of Meta’s LLMs and OpenAI we get a glimpse of the centrality of the cloud for AI, from its inception until application. It would have been impossible for OpenAI to train and run its models in-house; it required too many interconnected super-powerful cutting-edge processors, impossible to access without Big Tech. The relationship between Microsoft and OpenAI exemplifies how cloud giants relate to smaller companies developing frontier LLMs. Start-ups and other AI mid-size companies are obliged to rely on public clouds because without processing power, data and the engineering knowledge required to set those processors to train and run LLMs, the AI would not deliver. The fact that Microsoft also offers Llama on its cloud while its agreement with OpenAI prevents the latter from offering the ChatGPT service on other clouds gives further proof of power asymmetries.

In short, it is in the best interest of Big Tech to put in open source pieces of knowledge that they still control and integrate with other secretly kept pieces. Open sourcing also increases success rates and minimises Big Tech innovation risk while they keep turning vital knowledge modules into their intangible assets. Partly unwittingly, even the open-source community that was fostered to counterbalance large tech companies’ power has been co-opted and the overall frontier AI field lays at the feet of Big Tech. What all these experiences have in common is that Big Tech controls without owning technologies and other organisations. Because of the centrality of AI for every sphere of life, there is an urgency to think of alternatives.

[.green]7[.green] From Regulating Big Tech Controlled AI to Producing the AI That Society and the Planet Need

The choice is not between accepting the dominance of Big Tech within AI development or rejecting the use of AI entirely. A viable alternative requires both regulating Big Tech and the AI they promote and, just as importantly, designing institutions that promote the development of AI that put people ahead of profits.

Much of the policy discussion since the release of ChatGPT has centred around the agency of foundation AI models, and even Big Tech and OpenAI’s top management has advocated for regulating AI uses. This diverts public attention from regulating what type of AI is coded, by whom and who profits from it. Regulating the technology is not regulating its monopolisation, something that has still not been addressed by antitrust offices.

While the US executive AI order “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence” only focuses on the risks and harmful uses of AI, the European AI Act establishes obligations and transparency requirements for general purpose AI models. This is where the greatest difficulties are imposed on Big Tech companies. This certainly does not dismantle Big Tech’s control over the whole AI value chain, but at least holds them accountable for how their technology is used. However, Big Tech and their satellites like OpenAI will find it easier to comply with these regulations because they have the resources to do so, which is not necessarily the case for the other organisations working on LLMs or other generative AI models. Also, the decision to leave open-source models outside of this scrutiny directly favours Meta and indirectly benefits Microsoft, Amazon and Google, given that Llama runs on their clouds.

All the existing regulations miss the chance to require making public foundation models’ training data, as well as the indicators, parameters and variables that are used to manage it. Nor do existing regulations cover what companies like OpenAI do with the data that are entered as prompts to interact with their LLMs. And because these regulations were not accompanied by reforms in antitrust, they missed the chance to spot and regulate the uses of corporate venture capital for steering and controlling AI start-ups.

Against this backdrop, in which the most likely scenario is that AI will remain controlled by a few US giants, there is an urgent need to offer public alternatives to the for-profit development of AI. The following sections offer three areas ways to explore developing AI for public good. AI is not simply the cutting-edge technology of our time. It is a general-purpose technology, which means that it can be applied widely to the most diverse uses. And because it is a new method of invention, it has direct impacts on how scientific and technological investigations take place.

AI for addressing major challenges

AI is particularly useful for processing and synthesising large amounts of information, with multiple possible applications for addressing global challenges.

For example, AI could be deployed in planning the green transformation, both strategically and for solving specific chokepoints. Renewable energy management is made more efficient by centralising data about supply and demand and processing it with AI. Cloud giants are already offering computing services for this purpose while gathering energy data. Instead, the state could be a steward of energy data sources and could use AI for public smart grids aimed at providing a more efficient, cheaper and socially-just distribution of energy. Predictive maintenance of forests and more generally natural parks, using computer vision and other AI models could improve wildfire predictions and inform on the best species of trees to be planted according to the type of soil and forest. Applying AI for these and other social and environmentally beneficial purposes[24] should not be confined to market solutions, among others, because of a lack of private incentives for adoption coupled with the urgency of tackling the ecological breakdown.

Many applications of AI for the environment do not require personal data. They could rely on, for instance, weather data that are or could be accessed for research purposes. Currently, the same companies with the largest proprietary personal datasets are developing models with European public data. In November 2023, Google published a paper in the journal Science with the results of GraphCast, an AI model trained with past weather data from the European Centre for Medium-Range Weather Forecasts (ECMWF). Even if the model is put in open source, Big Tech expands their knowledge on AI as they train models with public datasets. The resulting model can be adjusted or form the basis of other models then sold as a cloud service. Today, computing services for weather forecasts are already available on Big Tech clouds and public ECMWF data and GraphCast could be used to improve those services.

Public data should not be shared with companies that harvest data from the internet and then keep it secret. Reciprocity should prevail. If tech companies want to access public data, they need to be reciprocal and share their datasets with the wider community for public research purposes.

Another application for AI is in healthcare. Today, healthcare digitalisation is driven by self-control and self-discipline, with wearables nudging people and making them individually responsible for their health and wellbeing. Instead of seeing healthcare as a social responsibility, these devices further individualise it. But there are other alternative uses of AI for healthcare that could bring larger social benefits. Research has shown that the global health and biomedical sciences agenda is dominated by investigations on cancer and cardiovascular diseases approached from the field of molecular biology. Investigations on neglected diseases, pathogenic viruses, bacteria or other microorganisms, and biological vectors were marginal at least until 2020, the period covered by this research.[25]

While some of the latter changed with the pandemic, something that has not changed is that research on prevention, social determinants of health and assessment of socio-environmental factors influencing disease onset or progression remain overlooked. The approach that dominates, both in terms of privileged areas receiving private and public funding for research, is the therapeutic and specifically pharmacological intervention with drugs. There is, then, a lot of space for changing the approach towards a more holistic perspective. From focusing on treatments and looking at molecular degeneration, one could envision research that holistically synthesises multiple data sources to consider the social determinants of health and assesses socio-environmental factors influencing disease onset or progression. This would require an interdisciplinary team, using complementary qualitative methodologies to make sense of AI-powered results and access to healthcare data.

Data solidarity

AI requires data and this is one of the advantages of Big Tech; they have been amassing freely harvested data from citizens and organisations wide and large for decades. Opposing this free data harvesting with data privacy could, to some degree and if the companies comply with the regulation, put limits to their spoilage. However, it will not build alternatives to use and develop technologies that are not mainly driven by profits but, above all, good for the people, other living beings and nature.

Public databases, such as healthcare datasets, should be built on the principle of data solidarity. The notion of data solidarity refers to the decision to share data and information between actors and countries. As Kickbusch and Prainsack explain, in the Covid-19 pandemic, a false dichotomy was installed between respect for the privacy and freedom of individuals and the protection of health.[26] This dichotomy is false because individual freedoms require collective goods, commons that enable the realisation of those individual freedoms, and vice versa.

Anonymised health data offer an example. Under proper regulation and governance, its access could not only help to address pandemics and other global crises, but also to identify courses of action to improve the living conditions of the population. Healthcare data processed with AI could also provide evidence to improve prevention, diagnosis, treatment and care delivery. In short, it is desirable to promote data solidarity, especially to address critical situations such as ecological and health crises. The social costs, among others in terms of lives, and environmental costs of limiting access to data may be too great.

Data governance for these purposes could be placed in the custody of the World Health Organisation. The use of extracts from health databases should be guaranteed and facilitated for research purposes while preserving anonymity. The WHO role could be to ensure that data are shared only when their analysis is associated with investigations that contribute to increasing the public good and whose results remain in the public sphere. A related promising project could be to centralise Electronic Healthcare Records internationally or inside Europe. The project itself requires experts in computing science as well as public health policy experts, researchers from healthcare and biomedical sciences and social scientists.

Instead of moving towards this direction, the UK government has increasingly provided access to NHS data to large tech companies, providing them a fundamental resource for advancing in the commodification of healthcare. Amazon had a deal with the NHS to provide its Alexa devices to hospitals, potentially harvesting their healthcare data. By the end of 2023, the NHS granted the tech surveillance company Palantir with a £480 million contract to run its data platform. No wonder Google has included the NHS on its recently created strategic partners’ list for healthcare in Europe and is developing an LLM tailored for healthcare while trying to strike a deal with the NHS to apply it. Microsoft is researching on similar applications judging by its scientific publication entitled “Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine”.

Beyond healthcare and other cases in which citizens could be offered the chance to share their data with states when the latter if providing them a service, data solidarity shall also prevail in public procurement contracts. States should require companies providing public services to share with municipalities and councils the data they harvest as a side-product, which of course must be gathered with the informed consent of those producing it. These data could contribute to improving those services. For instance, data on the routes of rented bikes could provide information about where to prioritise the installation of bike lanes and where to place bike stations.

In all these and other potentially beneficial uses, data solidarity can only work if citizens are adequately informed about what data will be harvested and what are authorised uses and type of users of that data. This requires short and explicit statements that inform citizens. The NHS app, for instance, could include a pop up in which UK citizens are asked if they want to share their data clearly specifying what data they will be sharing, their uses, who will get access and offering them the chance to get access to the research findings. This pop ups must provide easy ways to opt out, forbidding convoluted communications such as those frequently used to discourage us from disabling cookies. Personal data or data that can result in forms of surveillance and control should never be gathered (such as data about the individuals who are renting public bikes).

Large collaborations for AI for the common good

Currently, it is impossible to conceive of the production of foundation AI models without Big Tech. No single existing institution or organisation can afford to produce LLMs or other advanced AI models without relying on technologies controlled by Amazon, Microsoft or Google which could be extended to include AI GPUs from Nvidia, though the three cloud giants are also designing their own AI semiconductors. Relying on other tech giants, would not much difference in terms of technological subordination, with the exception that other tech giants will probably not deliver frontier technologies, which are in the hands of those US Big Tech. States must build independent alternatives in the aim is to develop AI for the common good.

AI requires a lot of people working together. The OpenAI’s report introducing GPT4 was authored by 276 employees. The report includes a special acknowledgement to Microsoft, because some of its employees also contributed to developing GPT4, and to adversarial testers and red teamers (OpenAI, 2023).[27] If over 300 people are needed to develop an LLM, it is unreasonable to expect a start-up or university doing it by itself.

The development of alternative foundational AI models will not be funded by venture capital, which is controlled by Amazon, Microsoft and Google and funnelled into their own research priorities. Only public funding can support transformative AI that puts social and environmental challenges first.

A new public research institution to support the development of foundation AI models should ideally be an international collaboration. It should develop models for the public good, taking into consideration the environmental, social and cultural impacts of AI, freed from the imperative to make a profit.

This institution will need to bring talent back from Big Tech. This would require the five “Ps”:

- A clear purpose or mission.

- Sufficient processing power, that could also be used widely for other public research projects.

- Access to public datasets, achieved through data solidarity.

- Adequate pay (which may involve an increase in academic salaries across the board).

- Peers because foundational AI cannot be produced in isolation or in small groups with a principal investigator working with younger scholars. It requires hundreds of experienced people, peers, working together.

[.green]8[.green] Conclusion

The degree of control beyond ownership of the AI value chain, from research to adoption, justifies drastic and urgent measures. The suggestions above are only a first step and should be debated alongside other proposals in democratic spaces. Above all, societies must democratically and openly discuss what types of AI should be developed, by whom and for what purposes. This is inextricable from agreeing on what type of data will be harvested and how it will be governed (who will decide accesses, how it will be accessed, etc.). The time is now to shape technologies and redistribute their associated gains, because the more Big Tech-controlled AI is used and developed, the harder it will be to advance an alternative. And these current forms of AI, because they put profits first, will ultimately replace labour where it is cheaper to do so, be used to control workers that keep their jobs under the threat of being replaced, foster inequalities and further destabilise the critical times we live in.

A final note of caution about the risk of technological solutionism or determinism. AI could help us solve major global challenges, but neither AI nor any other technology will solve those problems by itself. What kind of life we want to live is a political decision; technology can, at most, unlock possible better futures. Without democratic control of AI, those better futures will never come to fruition.

Methodological note

[.fig]Figure 1: Network of main investors in the AI start-ups receiving funding since ChatGPT[.fig]

[.notes]Source: Author’s analysis based on Crunchbase. Data retrieved by February 2024. Download this figure as a pdf here.[.notes]

For Section Three, I used Crunchbase to retrieve a dataset with all the AI companies that have received funding since November 30, 2022, the day when ChatGPT was released. Crunchbase is a database aimed at providing venture capital business information about private and listed innovating companies, particularly start-ups.

The data were processed using the CorText platform,[28] which allowed us to build network maps by using specific algorithms that associate entities according to their frequency of co-occurrence within a corpus.[29] To build the network in this report, the Louvain community detection algorithm was applied as the cluster detection method.[30] To focus on the most influential investors, instead of mapping the whole network of AI companies’ top investors, I prioritised the 150 entities with the highest co-occurrence frequency. I used the chi-square proximity measure to determine nodes and edges to be considered in the network map. This is a direct local measure, meaning that it considers actual occurrences (investments in the same AI companies) between entities. To define the direct ties (edges), chi-square normalisation prioritises links towards higher degree nodes; these are the most frequent co-occurrences (funding the same AI companies) within the network. It thus privileges the strongest links for each organisation, so edges can be interpreted as an indicator of closeness between organisations.

Crunchbase provides the technological fields or industries (not distinguished in Crunchbase) where each firm operates, following a classification made by Crunchbase and firms themselves. I listed the frequency of appearance of all the technological fields or industries and plotted the most frequent ones for each cluster in Figure 1.

I also retrieved from Crunchbase information about corporate venture capital for selected tech giants by February 24 2024. I looked at all the companies that had one of my selected tech giants among their top five investors and then filtered this list to those that are still private firms and that have not been delisted. Table 1 presents the resulting number of top funded companies for each large tech corporation.

Appendix: Corporate venture capital in the tech sector

Looking not only at AI start-ups but at the whole start-up universe, the relevance of corporate venture capital becomes all the more apparent (see Table 1). The practice is especially used by Google, Intel and Microsoft, followed by Alibaba, Samsung and Tencent. Table 1 only provides data for the number of companies that have a large tech firm among their top five investors by February 2024, so the specific figures are far from set in stone. However, besides specific figures, Big Tech has been using venture capital as a means to control start-ups for years.

[.fig]Table A1: Main Corporate VC Investments. Selected Firms.[.fig]

[.notes]Source: Author’s analysis based on Crunchbase – data retrieved by late February 2024.[.notes]

Although there is ample evidence of corporate venture capital as a standard practice among most large tech companies, Apple is a notable exception. Far from indicating a weakness, this probably speaks of the nature of its spheres of control. Apple is an extremely sealed corporation that relates to other organisations and captures value from them requiring similar levels of hermeticism.

[1] “Annual Report 2023 — Form 10K”, Alphabet, 2024.

[2] Richard Phillips, Hannah Petersen and Ronen Palan, “Group subsidiaries, tax minimisation and offshore financial centres: Mapping organisational structures to establish the ‘in-betweener’ advantage”, Journal of International Business Policy, October 2020, vol. 4, pp. 286-307.

[3] Brian Callaci, “Control Without Responsibility: The Legal Creation of Franchising, 1960–1980”, Enterprise & Society, February 2020, vol. 22, pp. 156-182.

[4] Cédric Durand and William Milberg, “Intellectual monopoly in global value chains”, Review of International Political Economy, July 2018, vol. 27, pp. 404–429.

[5] Cédric Durand, Technoféodalisme: Critique de l’économie numérique, Zones: 2020; Ugo Pagano, “The crisis of intellectual monopoly capitalism”, Cambridge Journal of Economics, November 2014, vol. 38, pp. 1409–1429; Cecilia Rikap, Capitalism, Power and Innovation: Intellectual Monopoly Capitalism Uncovered, Routledge: 2020.

[6]Brett Christophers, Our Lives in Their Portfolios: Why Asset Managers Own the World, Verso Books: 2023; Nick Srnicek, “Value, rent and platform capitalism”, In Julieta Haidar and Maarten Keune (eds.), Work and Labour Relations in Global Platform Capitalism, Edward Elgar Publishing: 2021, pp. 29–45.

[7] Md Zahangir Alom et al., “The history began from alexnet: A comprehensive survey on deep learning approaches”, arXiv Preprint arXiv:1803.01164, 2018. Available here; Iain M. Cockburn et al., “The impact of artificial intelligence on innovation”, National Bureau of Economic Research, 2018. Available here.

[8] Multi-cloud, hybrid cloud and cloud agnostic solutions are being used. They are more expensive; thus, the cost savings of the cloud disappear, but these alternatives grant more bargaining power when negotiating cloud contracts.

[9] These data were scraped by Facundo Lastra.

[10] On AWS pricing see here and here.

[11] It is worth noting that BERD is considered in company-level accounting as an expenditure. This is why, unlike investments in plant and equipment, BERD is deducted for profit calculations. If we instead compute it as an investment and do not deduct it from the operating income of these companies, their profits would be even greater.

[12] Cecilia Rikap and Bengt-Åke Lundvall, The Digital Innovation Race Conceptualising the Emerging New World Order, Palgrave Macmillan: 2020.

[13] Michael Gofman and Zhao Jin, “Artificial Intelligence, Education, and Entrepreneurship”, Journal of Finance, Forthcoming, August 2022.

[14] Cecilia Rikap, “Same End by Different Means: Google, Amazon, Microsoft and Facebook’s Strategies to Dominate Artificial Intelligence”, CITYPERC Working Paper Series, 2023.

[15] Ibid.

[16] Ibid.

[17] Joel Klinger et al., “A narrowing of AI research?”, arXiv Preprint arXiv:2009.10385, 2020, p. 1. Available here.

[18] Rikap, “Same End by Different Means: Google, Amazon, Microsoft and Facebook’s Strategies to Dominate Artificial Intelligence”, CITYPERC Working Paper Series.

[19] Affiliations appear as Google, Google Research, Google Brain and DeepMind.

[20] Richard Stallman, Free software, free society: Selected essays of Richard M. Stallman, Lulu. Com: 2002; Sam Williams, Free as in freedom (2.0): Richard Stallman and the free software revolution, Free Software Foundation: 2010.

[21] Vscode is a source-code editor for modern web and cloud applications.

[22] React-native is a user interface software for app development.

[23] All this information was extracted from GitHub’s annual report which is called Octoverse and is available online. In the past, the report included more information, which is why it is not possible to update the figures that I have presented for 2018.

[24] A list of other potential uses can be found here.

[25] Federico E. Testoni et al., “Whose shoulders is health research standing on? Determining the key actors and contents of the prevailing biomedical research agenda”, PloS One, April 2021, vol. 16.

[26] Ilona Kickbusch and Barbara Prainsack, “Against data individualism: Why a pandemic accord needs to commit to data solidarity”, Global Policy, May 2023.

[27] OpenAI, “GPT-4 Technical Report”, arXiv Preprint arXiv:2303.08774, 2023. Available here.

[28] Elise Tancoigne, Marc Barbier, Jean-Phillippe Cointet, and Guy Richard, “The place of agricultural sciences in the literature on ecosystem services”, Ecosystem Services, December 2014, vol. 10, pp. 35–48.

[29] Marc Barbier, Marianne Bompart, Véronique Garandel-Batifol, and Andrei Mogoutov, “Textual analysis and scientometric mapping of the dynamic knowledge in and around the IFSA community”, in Ika Darnhofer, David Gibbon and Benoît Dedieu (eds.), Farming Systems Research into the 21st century: The new dynamic, Springer: 2012, pp. 73-94.

[30] Vincent D. Blondel, Jean-Loup Guillaume, Renaud Lambiotte, and Etienne Lefebvre, “Fast unfolding of communities in large networks”, Journal of Statistical Mechanics: Theory and Experiment, April 2008.